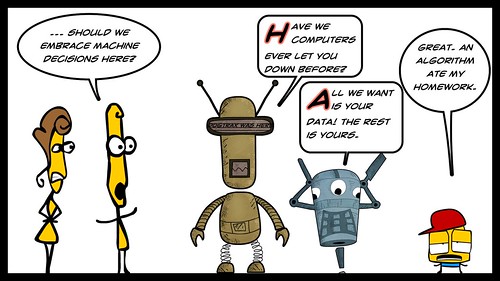

I really appreciate how reading other people’s blog posts in the E-Learning 3.0 community sparks my thinking. A piece by Matthias made me pause. He was responding to Stephen Downes’ exploration of badging, either as a possible tracking system or as an outcome of automated assessments in a decentralized Internet.

Matthias writes:

> I think, for the final summative assessments deciding about the future life of a human, such algorithms are not acceptable. By contrast, for the formative assessments throughout the study, they might be perfect. With human teachers, both types of assessments are equally costly, therefore we have too few of the latter and too many of the former.

Stephen writes:

> We are in the process of building society-wide automated competency recognition systems. These are already being developed for training, for compliance, for civic justice, and for credit and insurance assessment.
>
> So far – as Matthias Melcher suggests – the only people not benefiting are the learners themselves, with their own data. And that’s what can and must change.

I am reminded of the debates still raging in my own teaching field about machine-based scoring of writing, in which a software program analyzes a text and assigns it a score. My Western Massachusetts Writing Project colleagues (and mentors and former professors) Anne Herrington and the late Charlie Moran explored computerized grading of writing and found it incredibly lacking on many fronts. I think their research still holds true today.

You can see the collection of pieces they curated for the National Writing Project.

And there is the statement on machine scoring by the National Council of Teachers of English, which concludes:

“Writing-to-a-machine violates the essential nature of writing.”

This all came to mind as I read and then re-read Matthias’ reflection. His position is to separate formative work from summative work, and to consider whether machine learning and algorithmic software might help in the formative stages while coming into conflict with our understanding of teaching and learning in the summative stages. And, he suggests, if machine learning helps fill the gaps in the formative stages, an educator might have more time and energy for the summative work.

Note: I am focusing my reflections on the teaching of writing here, which may twist Matthias’ own focus a bit. I think he had a different lens in mind.

Perhaps this idea of machines for formative assessment would be helpful in some academic fields or in the larger concept of life’s learning experiences. In particular, when learning is happening and unfolding across multiple platforms and different spaces, a machine program might do a better job of tracking progress than we humans can. (I think this is one of Stephen’s points: that the Decentralized Web makes it more difficult to curate your learning experiences, and that algorithms might help solve this problem. Is this where blockchain comes in?)

But I am a writer and a teacher of young writers, so this conversation took me in another, not unrelated, direction. In writing, the formative path to a finished piece is actually where the learning and the teaching take place. It is in the brainstorming, the drafting, the revision for mechanics and audience, the reworking … this is where the “writing happens.” And it is mostly formative, made deeper and richer when the teacher confers with the writer. It’s not a task best left to isolation.

I’d resist having a software program track the path of writing like this, with word counts, syntax reviews, and whatever other criteria might be used to evaluate a text. I’ll admit that AI has made intriguing advances in this field, but not enough for me to jump on board. So might one argue that machines are better suited to summative assessment? Um. Nope. Thinking of the machine as your audience for a final piece of writing goes against the grain of the power and intent of writing, if writing is to be authentic.

Which is not to say this isn’t already happening. Look at the many standardized testing platforms now in use across the board, in student assessments and in teacher training programs, and the shift is decidedly towards machine scoring. This is more about saving money than about assessing quality (you don’t have to pay human scorers), which means the shift towards computers as scorers is happening for all the wrong reasons: to cut costs, not to increase learning.

At least, this seems true in the field of writing.

Peace (program it),

Kevin