I often make music to go with poems, but tonight, I write a poem to go with some music.

Peace (In Night’s Sky Eyes),

Kevin

I submitted some poems with audio to the Studio Cannon poetry “audio zine” and got all three of my poems accepted for the latest issue. The poems, which I had written as part of my morning writing routine, were Crane, Guitar and Storm. The online journal focuses primarily on audio voice, so each poet narrates their verse. I like that focus on speaking and listening, and online audio.

Peace (and Sound),

Kevin

I am not sure how to even categorize this, but I found this interesting prose/poem tool from an online journal called ‘the html review’ and went into the platform, wanting to figure out how I could use it. The tool, Prose/Play by Katherine Yang, allows you to layer words, and when you hover, the words appear. The randomizer tool shuffles the selections across the piece, creating a rather interesting effect as you click, click, click. There’s no save or share button at the site, so I did a screencast video to capture the motion.

On the left of the screen, you can see my “coded” words, and on the right is the poem itself in motion.

Peace (Layered in Wonder),

Kevin

I am looking forward to an exploration of AI tools within the ETMOOC2 community, soon to be unfolding under the title of Artificial Intelligence And You. I’ve already been doing similar explorations within the NWPStudio with my National Writing Project friends, and ETMOOC was one of the first connected MOOCs (but I was not part of it). In fact, ETMOOC helped pave the way for CLMOOC (which I helped facilitate from the start and its long tail continues even today).

I noticed a call for adding a slide to a Google Slideshow, and wondered how I might use that invitation to do something to represent myself in the community.

To gear up, and to play around (there’s that CLMOOC ethos at work) with AI, I decided to ask ChatGPT about how a MOOC might explore AI. Its reply was fine and interesting, and I realized I wanted to do something with the reply, but what? At first, I tried to use Hypothesis with the reply, and I think it worked but I wasn’t sure, and I need to go back to see how notes in the margins might work with ChatGPT.

Instead, I decided I wanted to make art out of the reply. I grabbed its response as a screenshot and then went into Dall-E2 to create art. This took quite a few queries to get what I wanted, along the lines of a connected community and learning and artificial intelligence. It kept adding garbled text to the generative art, but then I landed on this theme of sketched characters holding hands, connecting around a planetary body.

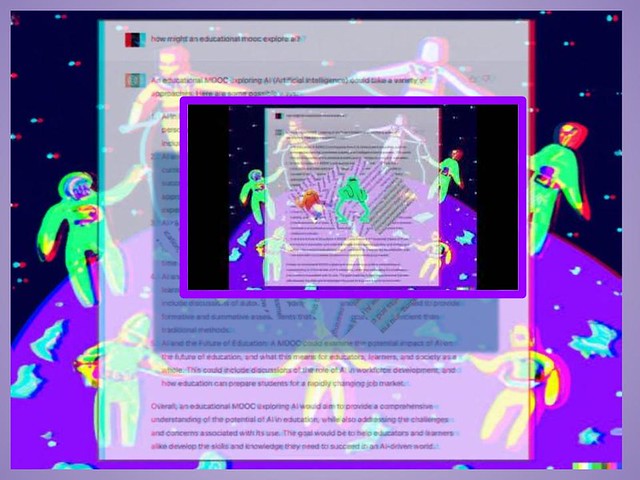

I then took both the text screenshot and the artwork and folded them together on the Google Slide, using the fader tool to make the art bleed through the surface of the text. I downloaded that slide as an image file. I knew I wanted to do something else, something to show the off-kilter, disruptive nature of AI.

I landed on using an iPad app called Fragment, which does what it says: it takes an image file and breaks it into pieces, like a broken mirror. There are some other effects within Fragment that allow for slight filters. I found one by just randomizing the controls. The one I saved takes the center of the file and breaks it into what seem to be smaller windows, tumbling out of the file. Metaphorical imagery, it occurred to me as I was doing it, given our confusion over where AI might be going, and taking us with it.

Perfect. I liked that fragmented image of text falling out of the center. Then later, I decided I wanted to go another step forward with more media layers, adding music to the fragmented image. I’ve done my own exploring of AI music generators, which I find lacking right now. Which is too bad because an AI soundtrack would have made sense. Instead, I ended up using found music loops inside Soundtrap, but I did so by searching for ones that captured the sense of disruption and that had a sort of machine-like quality to the loops. I called what I made BotBeat (AI Explorations).

I wasn’t yet done, though. I found that I really wanted a dance party (more echoes of CLMOOC, where a collaborative dance party we once created remains a top moment for many of us). So I went into Giphy and created a series of animated gifs with dancing creatures layered on top of the fragmented Chat/DallE image with the BotBeat as the music to dance to. This was the final gif.

I then took those gifs into iMovie, adding the song as the audio layer and, done! I have since layered the video dance party on top of the slideshow with the original image mix, but added a shadow effect to make it seem like the dance party was in the air above the slide.

Why do all this? Partially because I was curious about where my own curiosity might take me (I didn’t have a real plan when I started but followed my instinct and interest), and partially because I think it is incumbent on us to not feel satisfied with what these new AI tools feed us. We need to be pushing the AI tools, but doing that by tapping into what makes us human and unique: artistic vision, experimentation and expression, whether through poetry, music, art or whatever inspires us.

Peace (and Bots),

Kevin

PS, a note on blog title grammar: I know my blog post title here, The Artistic Line Between AI and I, is wrong, but I like the flow of it. If I had “me” in there, eh, it wouldn’t work. For I, anyway (hahaha).

Prompt: https://www.ethicalela.com/rest-resist-composing-a-haibun-poem/

Added later: a blackout found poem from within that haibun poem

Peace (and poems),

Kevin

This poem caused me a lot of writing headaches throughout the day, starting with the one word that was the prompt to build a poem off of: keel. I went metaphorical and nautical, but my earlier draft versions were so, eh, off, that I kept returning to the poem throughout the day, tinkering and revising, removing and adding, until I landed on something vaguely acceptable.

It’s a love poem, as it should be.

Peace (and Partners),

Kevin