The prompt for DS106 Daily Create today is all about the International Day of Friendship. I made this loop track for all of my CLMOOC friends out there. You know who you are.

Peace (and song),

Kevin

I went with some friends to a screening of a jazz music documentary on the legendary Buster Williams (who played with many jazz greats) and it was a wonderful movie. It’s called Buster Williams: From Bass To Infinity. The director was there, explaining his approach and answering questions.

Williams comes across as such a thoughtful, kind man and musician. There were some animation sequences over his voice, telling his stories of early gigs with Sonny Stitt and Gene Ammons, that were quite interesting, too.

Peace (and sound),

Kevin

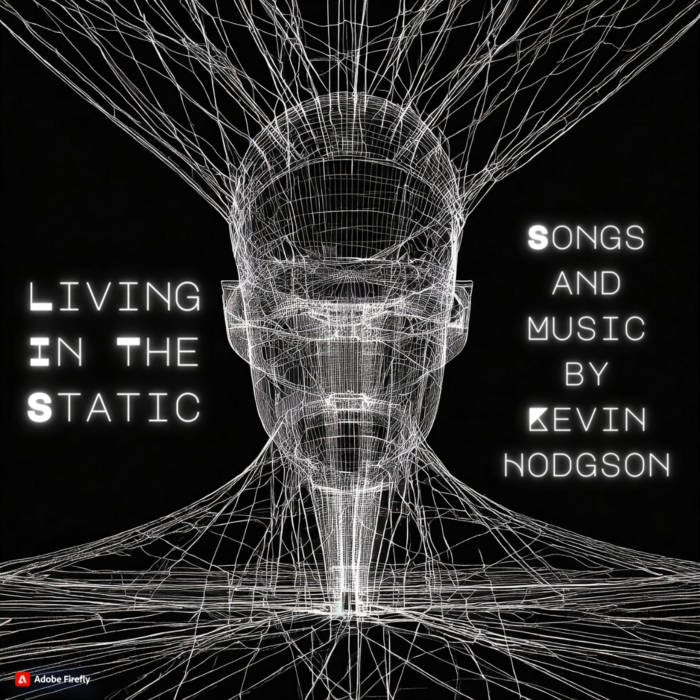

I have written a collection of new songs since January, recorded them, and released them as an album on Bandcamp: Living In The Static. It’s free to listen, if curious.

Peace (and music),

Kevin

This morning’s prompt at DS106 Daily Create was to take an old Public Domain image of a bunch of men standing on the deck of an old, tilting house and imagine them as a band releasing a song. I used Canva to design their “single” track, which I called Living In A Tilted World.

I decided to go a step further, using the Suno AI Music site to create a fake song to go along with the fake band. I tried Suno out a few weeks ago and in that short time, I think it has gotten better with a new version of its algorithms (which is both fascinating and alarming, as a creative person worried about the intrusion of AI in our spaces).

I instructed Suno to produce:

an old sea shanty with the title of “living in a tilted world” about a house tilting into the ocean

Take a listen to the Suno single: Tilting Tides.

Catchy, right?

Peace (in the great fake out),

Kevin

Since last year, I have been playing around and experimenting with the emergence of AI tools that create music (see earlier posts). Some of it has been interesting. Most have been pretty bland. As someone who makes music, but who keeps an open mind about technology, I’ve been trying to keep tabs on things (as best as one can do).

Suno, a new site to me though I guess it has been around for a few months, is quite different in the way that it integrates music, voice and lyrics in its AI production, and it is quite a leap forward from the other sites I have tinkered with.

It works like a sort of a Chatbot — you write in a theme or topic and suggest a music style and it works to craft a short blurb of a song, with music and with vocals singing words generated by some sort of ChatGPT text. The quality here is much higher than other sites I have played with.

And the results, while still formulaic and a bit generic in sound, are interesting and show how songwriting and music production are the next wave of these AI tools. They may never supplant musicians and songwriters (he writes, hopeful) but they do demonstrate how making song tracks could become rather automated in the time ahead of us, either for commercial products or some other means.

Listen to a song I had Suno generate about our dog, Rayna, and her days snoozing on the couch.

Then listen to a song I had it create about Duke Rushmore, a fictional musician whose name is the name of my real rock and roll band.

Weird, right?

Peace (and song),

Kevin

The prompt for this morning’s Daily Create via DS106 was to use Scrap-Coloring to color an image or file. I’ve used the site before and it’s easy to get lost in it, particularly if the image has a lot of little details. I chose the cover design of my album of sound sketches from last year (take a listen).

Peace (and sound),

Kevin

I’ve been trying to get myself into a regular routine again of writing songs, and taking any kernel of something to the next steps, of demo recording and maybe making a video to go with it. This one — Constellations (Moments Left Behind) — might still need some work but I like the way I am trying to use constellation stories as a way to frame our own experiences. I think it sort of works.

Peace (and sound),

Kevin