First, it was Wikipedia that would be the end of student research. Then it was Google and other search engines that would be the end of students discovering and learning facts and information. Now ChatGPT might be the end of student writing. Period.

Those earlier predictions didn’t quite pan out in the extreme, but they still had important reverberations across learning communities. In the same way, this fear of Machine Learning chat may not play out as dramatically as the warnings already circulating in teaching circles suggest. That doesn’t mean educators can ignore text-based Machine Learning systems, though. It is a technology that is becoming more powerful, more user-friendly, and more ubiquitous.

For sure, educators need to think deeply about how we may need to change and adapt the ways we teach our young writers what writing is, fundamentally, and how and why writing gets created. If students can just pop a teacher prompt into a Machine Learning-infused chat engine and get an essay or poem or story spit out in seconds, then we need to consider what we would like our learners to be doing when the screen is so powerful. And the answer to that question (what can our students do that machine learning can’t?) could ultimately strengthen the educational system we are part of.

Like many, I’ve been playing with the new ChatGPT from OpenAI since it was released a few weeks ago. As I understand it (and I don’t, really, at any deep technical level), it’s a computational engine that generates predictive text, drawing on patterns from a massive body of text. Ask it a question and it quickly answers. Ask for a story and it writes one. Ask for a poem or a play (see my skit at the top of the page) or an essay, or even lines of computer code, and it will generate that, too.

It’s not always correct (the *Lightning Thief* response looks good but has lots of errors when checked against the text itself), but the program is impressive in its own imperfect ways, including the fact that it could draw on Rick Riordan’s book series at all. And, as powerful as it is, this current version of ChatGPT may already be out of date. According to the hosts of the Hard Fork podcast, the next version is already in development, and that next iteration will be much faster, much larger in scale, and much “smarter” in its responses.

Can you imagine a student taking a teacher’s writing prompt, putting it into the chat engine, and then submitting the generated text as their own classroom work? Maybe. Probably. Will that be plagiarism? Maybe.

Or could a student “collaborate” with the chat engine, using the generated text as a starting point for some deeper kind of writing? Maybe. Probably. Could they use it for revision help on a text they have written? Maybe. Probably. Right now, though, I’ve found that it flattens the voice of the writing.

Could ChatGPT eventually replace teachers? Maybe, although I doubt it. (Or is that just a human response?)

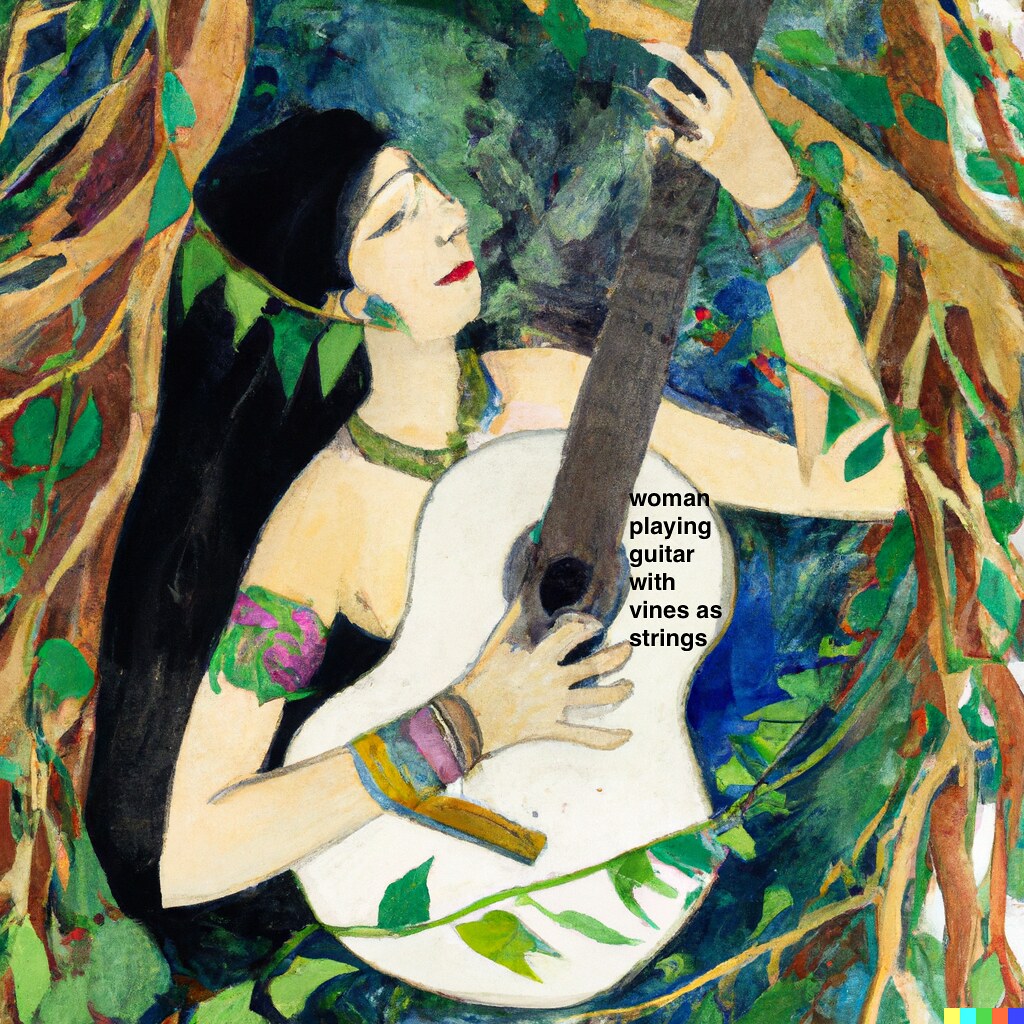

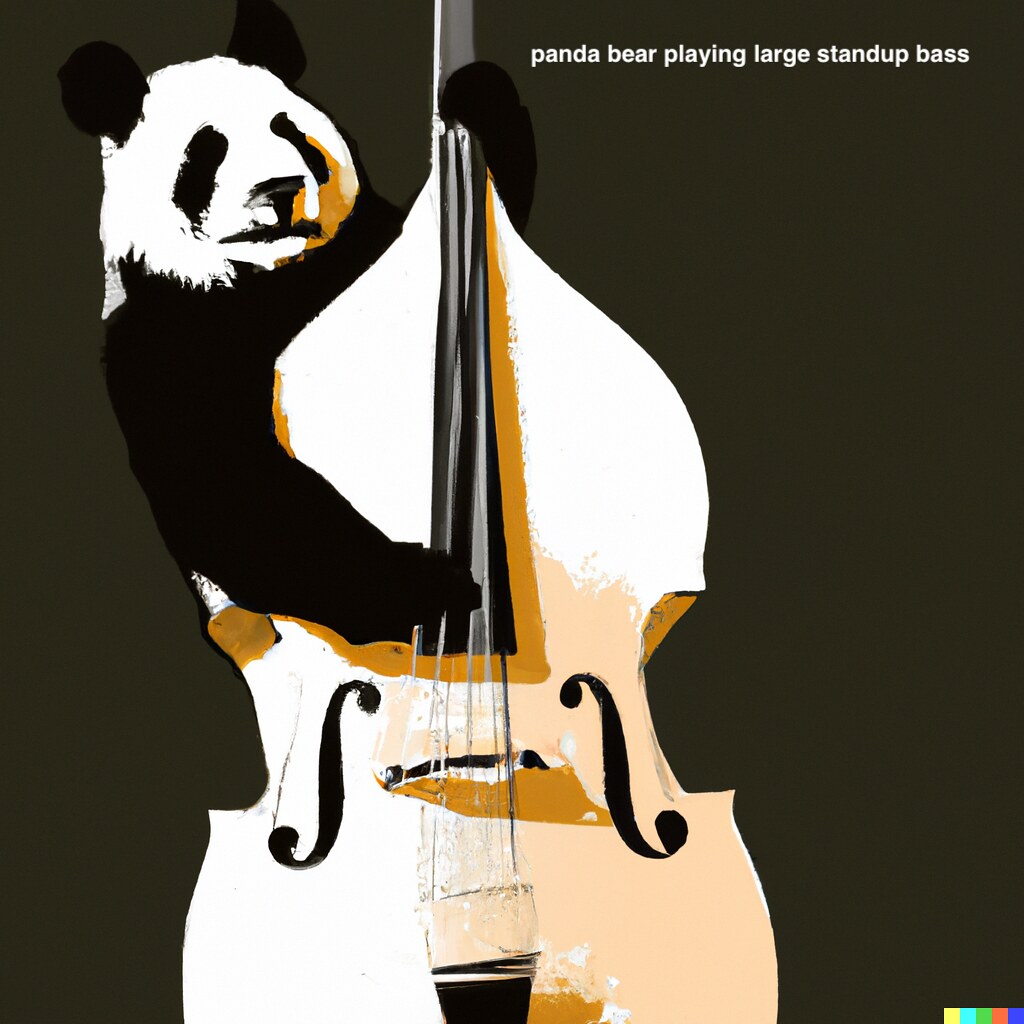

But, for educators, it will mean another reckoning anyway. Machine Learning-generated chat will force us to reconsider our standard writing assignments and reflect on what we expect our students to be doing when they write. It may mean we can no longer rely on what we have always done. We may have to tap into more creative inquiry for students, something we should be doing anyway. More personal work. More nuanced compositions. More collaborations. More multimedia pieces, where writing and image and video and audio and more work in tandem toward a singular message. The bot can’t do that. (Eh, not yet, anyway. There is the DALL-E art bot, there’s a music/audio bot under development, and there are probably more that I don’t know about.)

Curious about all this, I’ve been reading the work of folks like Eric Curts, of the Control Alt Achieve blog, who used ChatGPT as a collaborator to write his blog post about the chatbot’s possibilities and downsides. I’ve been listening to podcasts like Hard Fork to get a deeper sense of the shifts and fissures now underway, including whether AI chat tools might replace web search engines entirely (or not). I’ve been reading pieces in the New York Times and the Washington Post, and articles signaling the beginning of the end of high school English classes. I’m reading critical pieces, too, which note how all the attention on these systems takes focus away from critical teaching skills and students in need (and which, as one post did, remind me that Machine Learning systems are different from AI).

And I’ve been diving deeper into ChatGPT with fellow National Writing Project friends, exploring what the bot does when we give it assignments, what it does when we ask it to be creative, and how we might push it a bit further to figure out its possibilities. (Join us in the NWPStudio if you want to be part of the Deep Dive explorations.)

Yeah, none of us really knows what we’re doing yet, and maybe we’re just feeding the AI bot more information to use against us. We don’t have a clear sense of where it is all going in the days ahead, either. But many of us in education and the teaching of writing intuitively understand that we need to pay attention to this technology, and if you are not yet doing that, you might want to start.

It’s going to be important.

Peace (keeping it humanized),

Kevin